Sometimes it is useful to have an automated tool that builds the full map of a web site. Perhaps not of your own site, since you have probably already implemented some kind of automatic sitemap generation and notification to Google (haven’t you yet?), but of a client’s.

There are a few tools to map an external web site, and I tried some of them for my particular case. They were just adware, or demos, or they obscured the links in the final report… Yes, of course, sometimes a $30 license is worth it, but you might not want to buy a new piece of proprietary software every time you need a new feature, might you?

So I decided to write it myself in PHP, not for the money, but for the fun 🙂

The architecture

First of all, a bit of planning for the application:

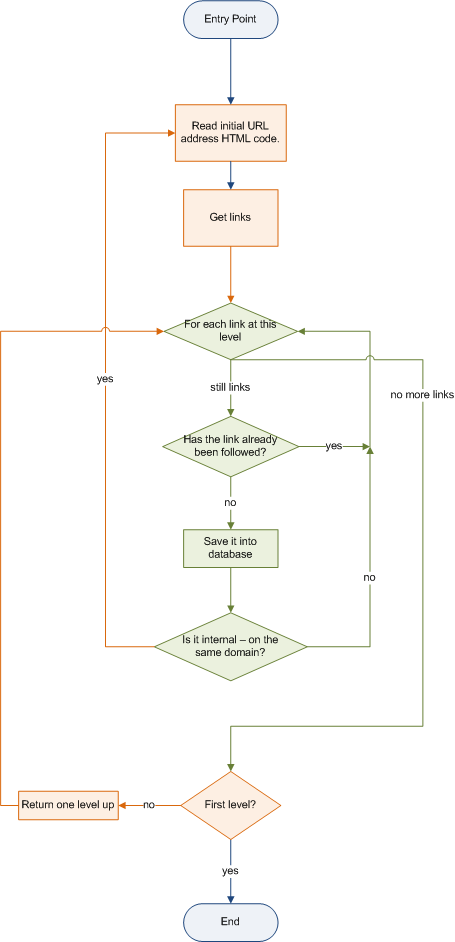

Web mapper work flow diagram (Visio).

Let’s take a look at this workflow diagram. The mapper starts by reading the entry URL in search of links. Blue marks the entry and exit points of the application.

Then it reads the HTML code, parses it in search of links and, if it finds any, loops over each one of them. Green marks the actions taken within the loop. Basically, this involves saving the link into the database and, if the address is not external (that is, it points to a page within the same domain name), reading that page and starting the process again for the new URL.

This practice is commonly known as ‘recursion’, and is represented in orange. When a link within a page is followed, the application enters a new recursion level: it reads the HTML code and looks for links, starting a new loop. Think of it as a set of Russian dolls: every link on a page leads to a page with more links, so each iteration of the previous loop starts a whole new loop, and so on.

The recursion ends when a page has no links, or when all its links are external. The application has then found a leaf page: there is nothing deeper to explore, so it returns one level up. Once all the leaf pages have been read, the algorithm returns to the entry level and, after going through all the links found there, finishes.
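To make the recursion easier to picture, here is a minimal sketch that walks a tiny in-memory site instead of the real web. The `$site` array, the `example.com` and `other.org` addresses and the `crawlSketch()` function are all made up for illustration; this is not the class presented later, only the same idea reduced to its bones.

```php
<?php
// Hypothetical in-memory "site": each URL maps to the links found on that page.
$site = array(
    'http://example.com'             => array('http://example.com/about', 'http://example.com/blog', 'http://other.org'),
    'http://example.com/about'       => array('http://example.com'),
    'http://example.com/blog'        => array('http://example.com/blog/post-1', 'http://other.org/page'),
    'http://example.com/blog/post-1' => array(), // leaf page: no links at all
);

/**
 * Records $url with its depth, then recurses into the links of internal pages.
 */
function crawlSketch($url, array $site, $domain, array &$visited, $depth = 0)
{
    // never map the same address twice
    if (isset($visited[$url])) {
        return;
    }
    $visited[$url] = $depth;

    // external pages are recorded but never read
    if (parse_url($url, PHP_URL_HOST) !== $domain) {
        return;
    }

    // every internal link opens a new recursion level
    $links = isset($site[$url]) ? $site[$url] : array();
    foreach ($links as $link) {
        crawlSketch($link, $site, $domain, $visited, $depth + 1);
    }
}

$visited = array();
crawlSketch('http://example.com', $site, 'example.com', $visited);
print_r($visited);
```

The leaf page and the external pages are where each branch stops and returns one level up; the `$visited` array keeps the page that links back to the home page from starting an endless loop.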

Depending on the structure of the web site, the mapping can take a few minutes to complete, so it is better to use a fast and reliable programming language. In my case, I used PHP for simplicity’s sake, since it is the language I use daily, but on a test server where I can increase the execution time. For more intensive uses of this script, I would recommend translating it into another language, or running it from the console with the PHP interpreter rather than from the web server itself.
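As a rough sketch of the console option, a launcher script could check how it is being run before starting a long crawl. The `prepareLongRun()` helper is hypothetical, not part of the class; `PHP_SAPI` and `set_time_limit()` are standard PHP. The command line interpreter normally has no time limit by default, so the call below mostly makes that explicit.

```php
<?php
/**
 * Hypothetical helper: lifts the execution time limit for console runs only.
 * Returns true when the script runs under the command line interpreter.
 */
function prepareLongRun()
{
    if (PHP_SAPI === 'cli') {
        set_time_limit(0); // 0 means "no limit"
        return true;
    }
    return false; // web request: the limits of the web server still apply
}

$isConsole = prepareLongRun();
echo $isConsole ? "console run, no time limit\n" : "web request, server limits apply\n";
```

Assuming the launcher is saved as `crawl.php` (a made-up file name), it would be started with `php crawl.php`.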

In addition, this architecture is really basic, which means that a lot of things can be improved. For instance, a check has been added to avoid following links twice. This, however, is not so easy to implement, since inner links can follow different conventions (“www.domain.com”, “domain.com”, “sub.domain.com”, “/”, “./”). Thus, the code presented below should be used for academic and learning purposes only; I cannot guarantee that it will work perfectly.
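One possible way to soften the duplicate problem is to reduce every absolute URL to a normalized key before checking whether it has been seen. The sketch below is only an illustration: `normalizeUrlKey()` is a hypothetical helper that the class does not contain, and it assumes that “www.domain.com” and “domain.com” serve the same content, which is not true for every site.

```php
<?php
/**
 * Hypothetical helper: reduces equivalent spellings of an absolute URL to one key.
 * Assumption: the "www." host and the bare host point to the same pages.
 * Returns null for relative or malformed addresses, which must be resolved
 * against their parent page before they can be compared.
 */
function normalizeUrlKey($url)
{
    $parts = parse_url(trim($url));
    if ($parts === false || !isset($parts['host'])) {
        return null;
    }

    // host names are case insensitive
    $host = strtolower($parts['host']);
    if (strpos($host, 'www.') === 0) {
        $host = substr($host, 4);
    }

    // collapse "./" segments and drop the trailing slash
    $path = isset($parts['path']) ? $parts['path'] : '/';
    $path = str_replace('/./', '/', $path);
    $path = rtrim($path, '/');

    $query = isset($parts['query']) ? '?' . $parts['query'] : '';

    return $host . $path . $query;
}

var_dump(normalizeUrlKey('http://www.Domain.com/') === normalizeUrlKey('http://domain.com'));
var_dump(normalizeUrlKey('http://sub.domain.com/') === normalizeUrlKey('http://domain.com/'));
```

Subdomains are deliberately kept apart here, since “sub.domain.com” is often a different site altogether.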

The code

Let’s present the code now. It has been written for the CodeIgniter framework, but it can easily be ported to any other architecture or language. A few conventions:

- It is based on the class ‘spider’, whose main method ‘crawl()’ launches the initial entry point, and the inner and protected method ‘_crawl()’ implements the recursion.

- All inner and protected methods are preceded by an underscore (_).

- A few configuration attributes allow a sandbox definition, limiting the recursion levels and the domains to be crawled.

- As said before, it is based on CodeIgniter, but in this case only to provide an easy interface with the database. You can just replace all instances of $this->ci->db with your own MySQL connection class.
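The sandbox idea from the list above can be pictured as a single yes/no question asked before following a link. This is a simplified sketch and not the code of the class: `isInsideSandbox()` is a hypothetical function, and the domain list and depth limit are passed in as plain arguments instead of being read from class attributes.

```php
<?php
/**
 * Hypothetical helper: tells whether a link may be followed.
 * A link stays inside the sandbox when its host is one of the allowed
 * domains and the current depth is still below the limit.
 */
function isInsideSandbox($url, $depth, array $domains, $maxDepth)
{
    $host = parse_url($url, PHP_URL_HOST);
    if (!is_string($host)) {
        return false; // relative or malformed address: resolve it first
    }

    return $depth < $maxDepth && in_array(strtolower($host), $domains, true);
}

$domains = array('jorgealbaladejo.com', 'www.jorgealbaladejo.com');

var_dump(isInsideSandbox('http://jorgealbaladejo.com/blog', 1, $domains, 100));   // allowed domain, shallow
var_dump(isInsideSandbox('http://example.org/', 1, $domains, 100));               // external domain
var_dump(isInsideSandbox('http://jorgealbaladejo.com/blog', 100, $domains, 100)); // depth limit reached
```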

Using the class is very simple:

$spider = new spider();

$spider->crawl( array( 'entry' => 'http://jorgealbaladejo.com',

'domains' => array( 'jorgealbaladejo.com' ,

'www.jorgealbaladejo.com',

'jorgealbaladejo.ch',

'jorgealbaladejo.es' )

) );

$spider->printResults();

And finally, the core class code. It is commented, so it should be easy to follow. However, comments, improvements and questions are welcome, so if you find I am not doing something clearly enough, or you would have done it another way, please share your comments! 🙂

/**

* Class spider

* Creates a spider which crawls the internet

*

*/

class spider

{

/**

* @var Code Igniter object for database access

*

*/

var $ci;

/**

* @var maximum recursion depth

*

*/

var $max_depth = 100;

/**

* @var default domains to restrict crawling

*

*/

var $domains = array('jorgealbaladejo.com','www.jorgealbaladejo.com');

/**

* @var indentation level

*

*/

var $indent = 20;

/**

* @var internal links array

*

*/

var $links = array();

/**

* Constructor

*

*/

public function __construct()

{

$this->ci =& get_instance();

// load links in database to internal list

$this->_loadLinks();

}

/**

* Entry point for recursive function crawl

*

* @param array $params

* @param array $params['domains']

* @param string $params['entry']

*

* @return boolean

*

*/

public function crawl($params)

{

if (isset($params['domains']))

{

$this->domains = $params['domains'];

}

if (isset($params['entry']))

{

$this->_crawl($params['entry']);

return true;

}

return false;

}

/**

* Recursive crawling function

*

* @param string $page

* @param int $parent [optional]

* @param int $depth of current page [optional]

*

* @return void

*

*/

protected function _crawl($page, $parent = 0, $depth = 0)

{

// vars

$doc = '';

$title = '';

$desc = '';

$out = NULL;

$links = array();

$link = '';

//

// correct url

$page = $this->_buildUrl($page,$parent);

// avoid reading the same url twice

if (array_key_exists($page,$this->links))

{

return false;

}

// only read info for inner pages

if (in_array($this->_getDomain($page),$this->domains))

{

// read page content

$doc = $this->_getHTTPRequest($page);

// get page meta data

list($title, $desc) = $this->_analyzePage($doc,$page);

}

// log into inner array

$this->links[$page] = array( 'url' => $page ,

'title' => $title ,

'description' => $desc );

// write into database ($title and $desc are already escaped in _analyzePage)
$this->ci->db->query('INSERT INTO web.map VALUES("",' .
'"' . addslashes($page) . '",' .
'"' . $title . '",' .
'"' . $desc . '",' .
'"' . (int) $parent . '") ');

$parent = $this->ci->db->insert_id();

$this->_printLine($title,$desc,$page,$depth);

// avoid recursion for external domains

if (!in_array($this->_getDomain($page),$this->domains))

{

return;

}

// now get links and launch recursively

$links = $this->_getInnerLinks($doc);

foreach($links as $link)

{

if ($depth < $this->max_depth)

{

$this->_crawl($link,$parent,$depth+1);

}

}

return;

}

/**

* Prints the current links array

*

*

*/

public function printResults()

{

ksort($this->links);

foreach ($this->links AS $link)

{

$this->_printLine($link['title'],$link['description'],$link['url'],0);

}

}

/**

* Preloads the links already stored in the database

*

*/

private function _loadLinks()

{

$result = $this->ci->db->query('SELECT url,title,description FROM web.map ORDER BY url ASC');

if ($result->num_rows())

{

$results = $result->result();

foreach($results as $r)

{

$this->links[$r->url] = array( 'url' => $r->url ,

'title' => $r->title ,

'description' => $r->description );

}

}

}

/**

* Reads a document for html links

*

* @param string $doc [optional]

*

* @return array of links

*

*/

private function _getInnerLinks($doc = '')

{

$return = array();

$regexp = "<a\s[^>]*href=(\"??)([^\" >]*?)\\1[^>]*>(.*)<\/a>";

$match = null;

if(preg_match_all("/$regexp/siU", $doc, $matches, PREG_SET_ORDER))

{

foreach($matches as $match)

{

$return[] = $match[2];

}

}

return $return;

}

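/**
* Prints a line for the current url
*
* @param string $title
* @param string $desc
* @param string $page
* @param int $depth
*
*/
private function _printLine($title = '', $desc = '', $page = '', $depth = 0)
{
// do not indent at this time
$depth = 0;
//
// note: the markup below is an assumed minimal layout, adapt it to your own report
echo '<div style="margin-left:' . ($depth * $this->indent) . 'px">' .
'<h3>' . htmlspecialchars(stripslashes($title)) . '</h3>' .
'<p>' . htmlspecialchars(substr(stripslashes($desc),0,100)) . '</p>' .
'<a href="' . htmlspecialchars($page) . '">' . htmlspecialchars($page) . '</a>' .
'</div>';
}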

/**

* Analyzes a page to get meta information

*

* @param string $doc(cument)

* @param string $page

*

* @return list($title,$description);

*

*/

private function _analyzePage($doc, $page)

{

//

$title = '';

$desc = '';

//

if (preg_match('/<title[^>]*>(.*)<\/title>/siU', $doc, $out))

{

$title = addslashes($out[1]);

if (strlen($out[1]))

{

$title = substr($title,0,50);

}

}

$out = @get_meta_tags($page);

if (isset($out['description']))

{

$desc = addslashes($out['description']);

}

return array($title,$desc);

}

/**

* Completes a page link with the domain if needed

*

* @param string $page

* @param int $parent

*

* @return string corrected url

*

*/

private function _buildUrl($page,$parent)

{

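// prepare url if relative (no http:// or https:// prefix)
if (!preg_match('@^https?://@i', $page))
{
// root relative path; strpos() returns false when there is no slash,
// so the comparison must be strict
if (strpos($page,'/') === 0)
{
$page = 'http://' . $this->domains[0] . $page;
}
// page relative path
else
{
// leave mailto: and javascript: links untouched
if (strpos($page,'mailto:') === false && strpos($page,'javascript:') === false)
{
$page = $this->_getUrlByID($parent) . '/' . $page;
}
}
}
// trim final slash
$page = rtrim($page,'/');
return $page;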

}

/**

* Gets url for a given ID

*

* @param int url id

*

* @return string the url

*

*/

private function _getUrlByID($id)

{

$results = $this->ci->db->query('SELECT url FROM web.map WHERE ID = "' . $id . '"');

if ($results->num_rows())

{

$result = $results->result();

return $result[0]->url;

}

return '';

}

/**

* Gets domain name from a URL

*

* @param string url

*

* @return string domain name

*

*/

private function _getDomain($url = '')

{

// get host name from URL

preg_match('@^(?:https?://)?([^/]+)@i', $url, $matches);

if (isset($matches[1]))

{

return $matches[1];

}

else

{

return NULL;

}

}

/**

* HTTP helper function.

* Loads an http request and returns result.

*

* @param string $url to request

*

* @return string the result

*

*/

private function _getHTTPRequest($url = '')

{

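// vars
$html = '';
$file = false;
//
// configuration
$timeout = 15;
//
// execution: the timeout is applied through a stream context
$context = stream_context_create(array('http' => array('timeout' => $timeout)));
// fopen() reports failures by returning false, so the result is checked directly
$file = @fopen($url, 'rb', false, $context);
if ($file)
{
$html = (string) stream_get_contents($file);
fclose($file);
}
//
return $html;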

}

}
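Regular expressions are fragile when it comes to real-world HTML, so one of the improvements I would consider is reading the links with PHP’s DOM extension instead. The sketch below is an alternative technique, not what the class above does: `extractLinksWithDom()` is a hypothetical function, and it assumes the DOM extension is enabled on your PHP installation.

```php
<?php
/**
 * Hypothetical alternative to a regex based link reader.
 * Parses the document with DOMDocument and collects every non-empty href.
 */
function extractLinksWithDom($html)
{
    $links = array();
    if (trim($html) === '') {
        return $links; // nothing to parse
    }

    $dom = new DOMDocument();

    // real pages are rarely valid, so keep the parser warnings quiet
    $previous = libxml_use_internal_errors(true);
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    foreach ($dom->getElementsByTagName('a') as $anchor) {
        $href = trim($anchor->getAttribute('href'));
        if ($href !== '') {
            $links[] = $href;
        }
    }

    return $links;
}

$html = '<html><body><a href="/about">About</a> '
      . '<A HREF=\'http://example.com/x\'>X</A>'
      . '<a name="top">no href</a></body></html>';

print_r(extractLinksWithDom($html));
```

The parser copes with upper case tags, single quotes and anchors without an href, which are exactly the cases where a hand-written pattern tends to fail.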